📘 This content is part of version: v1.0.0 (Major release)In this chapter, you will learn approaches for protein structure prediction from amino acid sequences.

Protein structure and function¶

A lot of sequences have become available over the past decades. However, for most of them, we do not yet know what proteins they represent, and what functionality they have.

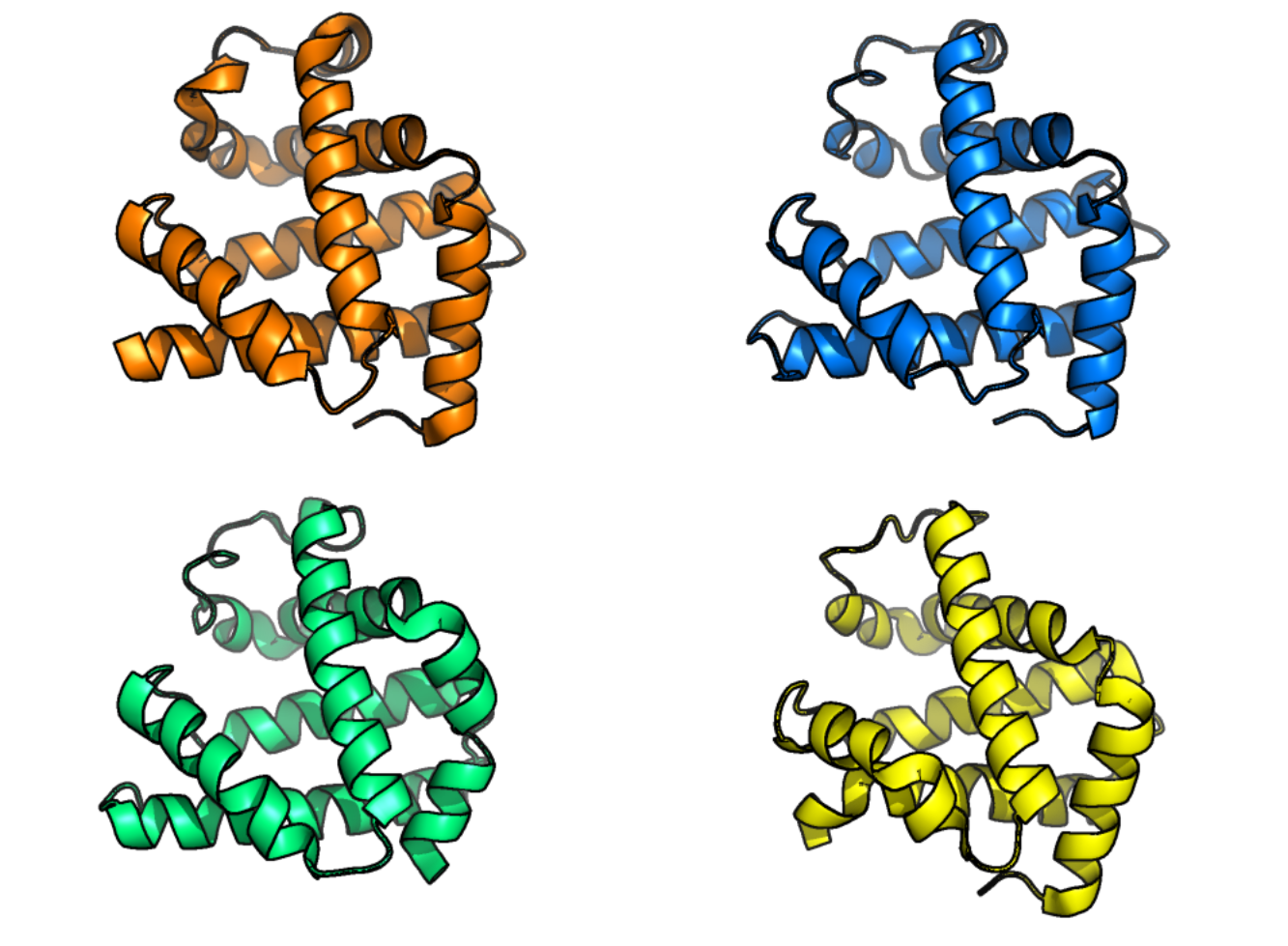

Proteins are essential for life on earth. They have many kinds of protein functions in organisms such as supporting tissue, organ, or cell structure (e.g., keratin in our skin), performing enzymatic reactions (e.g., Ribulose-1,5-bisphosphate carboxylase-oxygenase, a.k.a. Rubisco, in plants), or receptors for transduction of signals that mediate cell-to-cell communication. As discussed in chapter 1, a very small amount of ~20 amino acid building blocks form the basis of a structurally very diverse protein repertoire in all life forms. Whilst amino acid sequences are abundantly available these days, either directly through proteomics measurements, or indirectly through translated genomic sequences. In chapter 1 you have also learned that proteins are created as a chain of amino acids held together by peptide bonds, i.e., a polypeptide chain (the primary structure), that folds into a three-dimensional (tertiary) structure, based on various types of interactions between amino acid side groups. Usually, during this folding process, shorter stretches of local 2D (secondary) structures form first, held together by hydrogen bonds. Interestingly, whereas the amino acid sequence of proteins may differ, their folding may still result in comparable 3D structures of the polypeptide chain – with comparable or even similar functionality that is conserved at a evolutionary timescale (see Figure 1). Finally, several folded polypeptide chains may form a quaternary complex. The protein folding process is important, as it determines the 3D structure of polypeptide chains, and misfolding can lead to misfunctioning of the protein, for example by non-specific binding to other proteins, causing a disease in humans, or a less-performing mutant in plants. Altogether, to understand the function of proteins, knowing their 3D structures is key. Consequently, predicting protein structures based on protein sequence information has been a topic of high interest and relevance to biochemists and scientists in general for many decades.

Figure 1:Protein structures of human myoglobin (top left), African elephant myoglobin (top right, 80% sequence identity to human protein sequence), blackfin tuna myoglobin (bottom right, 45% sequence identity to human protein sequence), and pigeon myoglobin (bottom left, 25% sequence identity to human protein sequence). Myoglobin can be found in muscles and its main function is to supply oxygen to muscle cells. The protein structures in this figure illustrate how structure can be largely the same even for sequences that are quite different. Credits: Rubiera (2021).

Experimental protein structure determination¶

While current bioinformatics methods can generate many hypothetical protein sequences from genome-sequenced organisms, it is still hard and expensive to determine the corresponding 3D protein structures experimentally. The main traditional experimental analytical techniques used are nuclear magnetic resonance (NMR) spectroscopy and X-ray crystallography. The former results in useful but often noisy measurements as multiple structural conformations (i.e., the spatial arrangement of its constituent amino acids that together form the 3D shape) are generated; whereas the latter is more accurate, but also more expensive. Furthermore, it can take a year or even longer to fully elucidate the protein 3D structure from the data, and some structures cannot be measured at all, for example due to crystallization problems of the involved protein. Fortunately, fueled by recent technical advances, biological sequence data has become widely available, mostly in the form of genomic sequences. By translating these DNA sequences into possible amino acid sequences using the genetic code you have learned about in chapter 1, amino acid sequences can be inferred and theoretical proteins can be predicted. However, the sheer number of different biological sequences makes manual analysis of such predicted protein sequences too daunting. Thus, alternative methods to derive 3D protein structures are needed to interpret the large amount of biological sequence data that has become available in the recent decades.

The sequence-structure-function paradigm¶

The sequence-structure-function paradigm states that, in principle, all information to predict the folding of a protein, and thus its 3D structure and ultimately its function, is stored in its primary sequence. In practice, however, predicting structure from its sequence turned out to be a very complex and challenging task. One of the reasons that predicting the structure and function of proteins based on their sequence is more complex than the paradigm states is due to the occurrence of both short- and long-range interactions between protein local, secondary (2D) structure elements. These interactions typically form anchor points upon which the tertiary (3D) structure is based. This chapter first describes 2D structure assignment and prediction, after which 3D structure prediction approaches are discussed, including the main challenges and the three zones of tertiary structure prediction. It ends with the most recent approaches to predict and compare tertiary structures: AlphaFold and Foldseek.

Secondary protein structure prediction¶

Proteins consist of several locally defined secondary structure elements of which alpha helices (α-helices) and beta strands/sheets (β-strands/sheets) are the most commonly occurring ones. Please note here that a β-strand refers to one side of the β-sheet, where two strands come together to form the sheet structure (as we have learned in chapter 1). In this section, we will cover how to assign these two secondary structure elements using 3D protein structures, and how to predict them based on sequence data. Furthermore, we will cover the prediction of two biologically relevant “special cases” of secondary structure elements: transmembrane sections and signaling peptides.

Secondary structure assignment: labelling and accuracy¶

If we consider the 3D structure of folded protein chains, we typically observe that the structurally more ordered parts of their 3D structures contain more α-helices and β-strand stretches than the more randomly shaped parts. As these 2D elements fold locally, they can initiate folding of more complex tertiary folding patterns. Indeed, the presence/absence of α-helices and β-sheets and their specific conformation helps to categorize proteins. As a result, databases exist that categorize proteins according to specific fold types, in a categorized, hierarchical way. Therefore, the assignment of amino acid residues to either α-helix, β-strand, or “random coil” (i.e., “other”), based on the 3D structure of a protein chain, can be seen as a first step to understand the protein structural configuration. Also, when we need training data to predict secondary structure elements based on the primary (1D) sequence, we would need sufficient training data with labels based on actual structural assignments. Hence, several assignment tools were developed to replace the previously discussed daunting task of manual assignment of secondary structure elements based on known 3D information.

The three options for an amino acid residue as mentioned above would translate into a so-called “three-state model”, (α-helix, β-strand, or other) used by secondary structure assignment tools such as DSSP, PALSSE, and Stride. These tools use 3D structures as an input to assign three secondary structure labels. You will get hands-on experience with the interpretation of the outcome of these tools during the practical assignments. Please note that there are additional – less frequently occurring – secondary structure elements that could be recognized, such as the β-turn, a sharp bend in the protein chain, and several special helices. As a result, some tools will return eight states or even more. However, these can also be grouped in the three original states listed above.

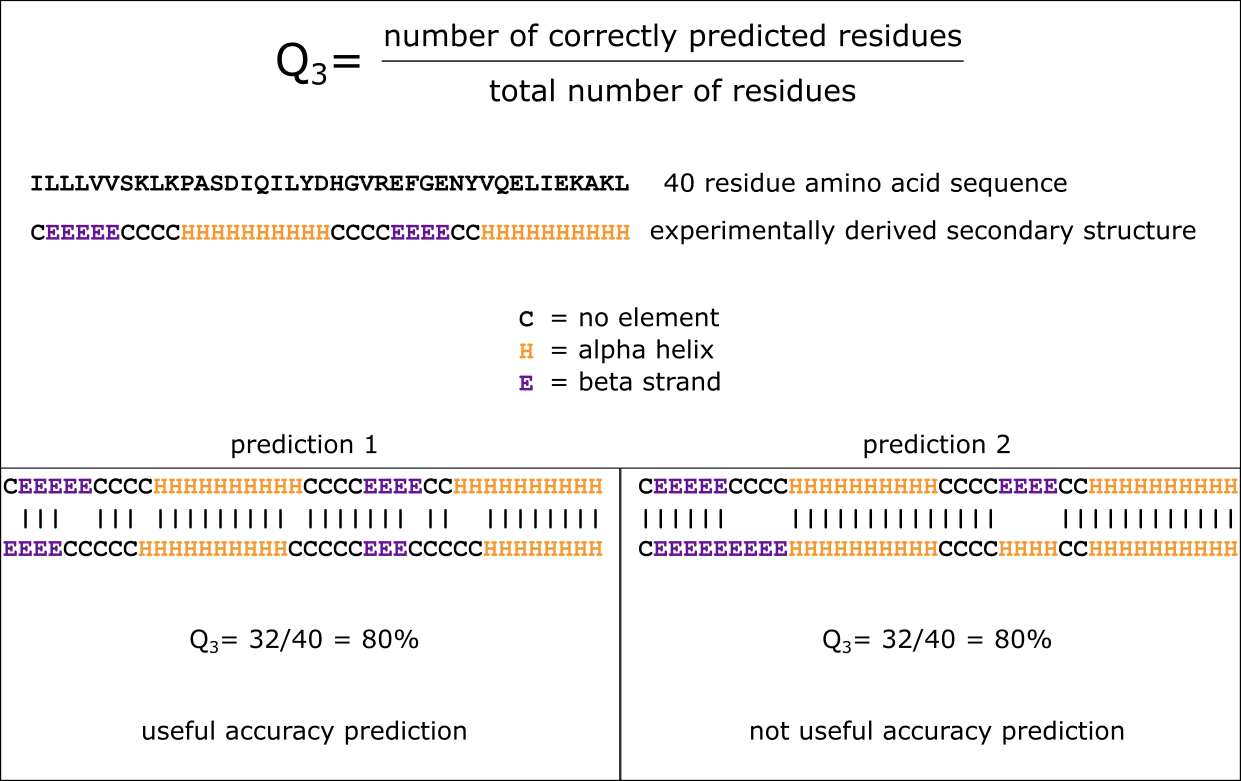

Assigning or predicting the secondary structure of a protein is only useful if you have an idea of the accuracy of the assignments/predictions stemming from a given tool. Any accuracy prediction or measurment can help to estimate the performance of a tool when presented with a sequence of an unknown structure. Accuracy can be measured either with respect to individual residue predictions or in relation to the numbers of correctly predicted helices and strands. One commonly used and relatively straightforward method to assign accuracy to individual residues is the Q3 measure. The values of Q3 can range between 0 and 100%, with 100% equalling a perfect prediction. The value for a completely random prediction depends on the number of different labels or “states” we predict: if we predict in the “three-state model”, a random prediction would return 33% for Q3. Please note that the same Q3 score can mean completely different things when studying the actual alignment between the predicted secondary structure assingments with the correct ones (Figure 2); hence, the values need to be considered together with the actual sequence alignments. Whilst it may seem natural to strive for a perfect prediction, the maximum Q3 that is generally achievable is ~80%. This is mainly caused by difficulties in defining the start and end of secondary structure elements: the different tools that assign or predict secondary structure may well deviate at the borders of the predicted elements. Even as a human, manual annotation of secondary structure elements may pose challenges on which residues are inside or outside secondary structure elements. During the practical assignments, you will explore this phenomenon more.

Figure 2:The Q3 measure produces useful accuracy predictions when the resulting secondary structure prediction contains a slight shift compared to the actual structure (prediction 1). It is however not useful when the secondary structure elements have been interpreted incorrectly (E -> H in prediction 2). Credits: CC BY-NC 4.0 Ridder et al. (2024).

Secondary structure prediction: statistical and machine-learning based approaches¶

Here, we will describe approaches that have emerged over the past decades to predict secondary structure elements on the basis of sequence data alone. As these approaches form the foundation for tertiary structure prediction tools, we will study them first.

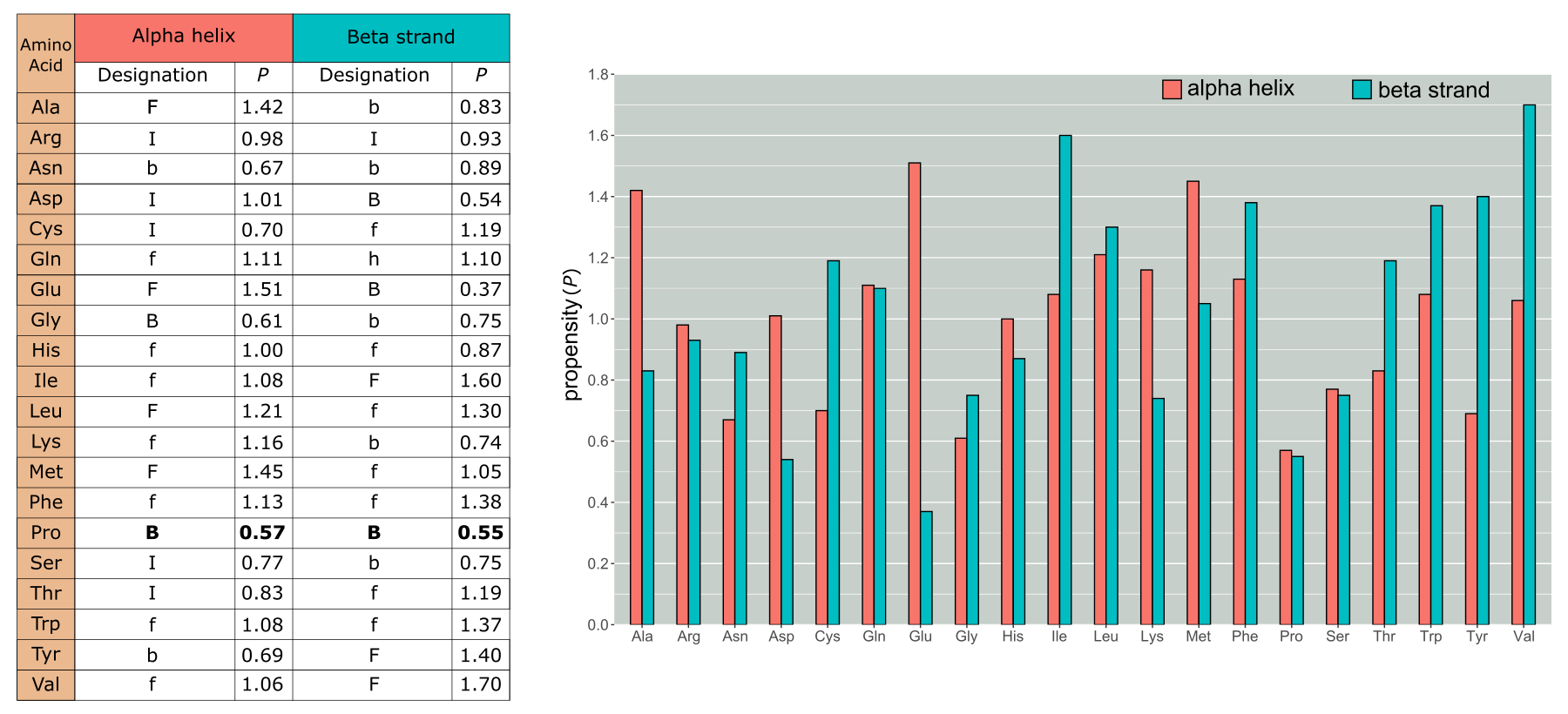

One of the first methods to predict secondary structures used statistics to infer a residue’s secondary structure and was the so-called Chou-Fasman approach. Since the 1970s, this strategy has been using an increasing set of reference protein 3D structures and 2D structure assignments to determine the natural tendency (propensity) for each amino acid type to either form, break, or be indifferent to form or break an α-helix or β-strand (see Figure 3). If we consider a stretch of amino acids, these propensities help to determine if and where an α-helix or β-strand starts or stops. For example, some amino acids have a strong tendency to form α-helices (e.g., Alanine) or β-strands (e.g., Isoleucine), whereas others tend to break these local structures. In particular, we can observe that Proline is a strong breaker of both structure elements. This can be explained by the special side group arrangement of Proline: this is fused twice to the backbone of the protein, rendering the amino acid very inflexible when it comes to the phi (φ) and ψ (psi) angles it can render (see chapter 1 for more information on phi and psi angles). Another amino acid that that tends to break alpha helices and beta strands is Glycine. Whilst now superseded, first by more accurate statistical methods and more recently by machine learning-based methods, the Chou-Fasman approach very elegantly demonstrates how the side groups of amino acids impact their tendency to form specific structures.

Figure 3:Chou and Fasman Propensities (P). F stands for strong former, f weak former, while B and b stand for strong and weak breaker, respectively. I (indifferent) indicates residues that are neither forming nor breaking helices or strands. We can see that Pro has the lowest propensity for forming a helix and a low one for strands as well. However, many other residues that are either weak or indifferent have been reclassified since the propensities shown here have been reparameterized as more data have become available. Credits: CC BY-NC 4.0 Ridder et al. (2024).

In subsequent decades, several statistics-based methods were developed that improved sequence-based predictions of secondary structure elements. They, for example, started to include information of multiple sequence alignments (MSA – a concept introduced in chapter 2 and used in chapter 3) including residue conservation: such approaches first matched the query sequence to database sequences with known 3D structures and assigned secondary structure elements. Then, using the best matching sequences, the secondary structure state of amino acid residues in the query sequence stretch are inferred by averaging the states from the best matching sequences found, further adapted using additional information such as the conservedness of the residue. An example of such an approach is Zpred. In general, the use of multiple sequences and additional information about the amino acid residue’s physicochemical properties and evolutionary conservation greatly enhanced the prediction performance. In the 2000’s, machine learning took over in the form of neural networks. Such approaches use a so-called sliding window that encompass multiple amino acid residues of which the central one’s state is predicted using a model. They typically result in probabilities for each state that can be used to assign the most likely state, i.e., alpha-helical, beta-strand, or random coil (in a three-state model). Examples of such approaches are Jnet and RaptorX.

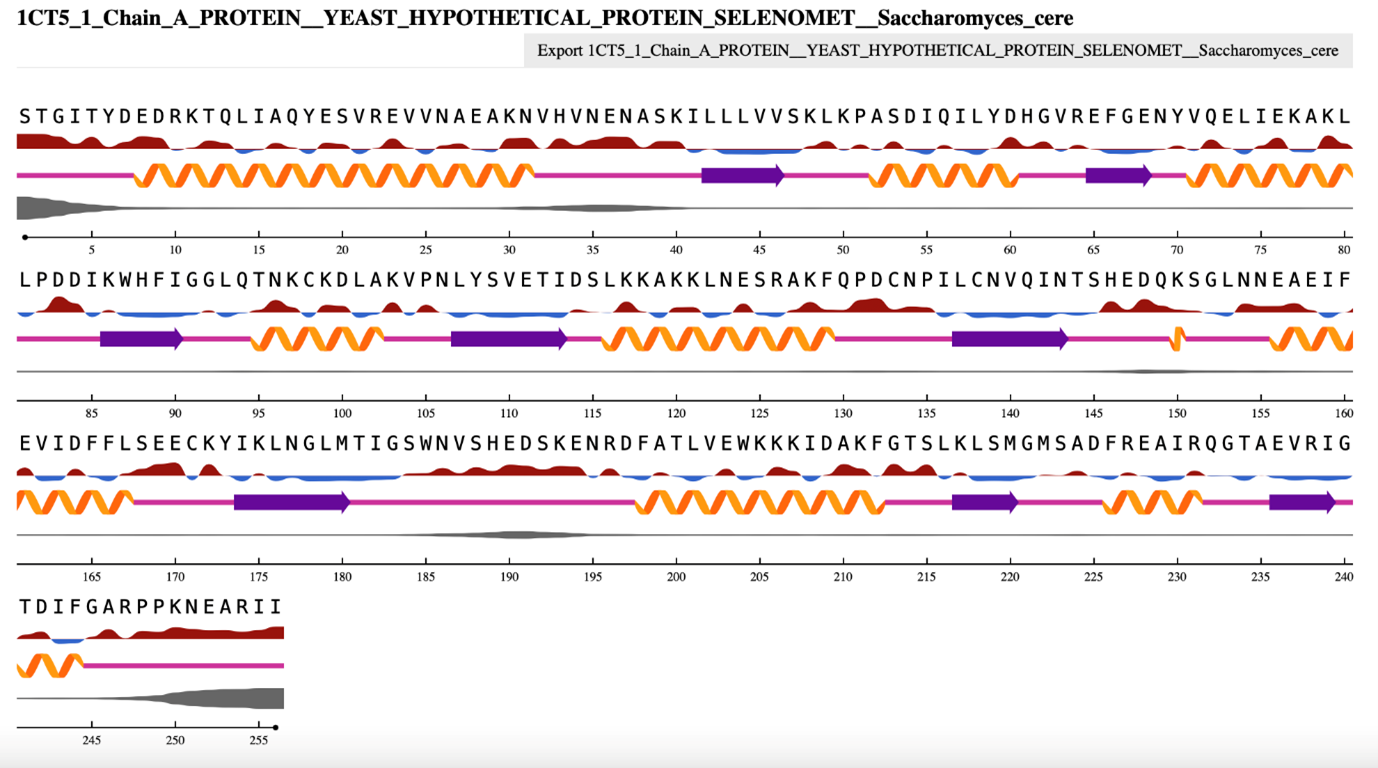

Most recently, deep learning approaches have been introduced to predict secondary structure elements based on sequence information. The state-of-the-art approach is NetSurfP, of which version 3 is currently available. You will gain hands-on experience with NetSurfP 3.0 during the practical assignments. Here, we will briefly explain how it works and how to assess its results. The prediction tool uses a deep neural network approach to accurately predict solvent accessibility and secondary structure using both three- and eight-state definitions, amongst other properties. To make this approach work, sufficient training data of protein chains with known PDB 3D structures is needed. From some protein sequences, there are many close variants present in the database, resulting in the abundant presence of some protein sequence stretches. To avoid over-fitting the model on predominant sequence stretches, each protein sequence that had more than 25% sequence identity to any other protein sequence already in the test set was removed. To ensure good quality data, a resolution of 2.5 Angstrom or better was selected for. This resulted in ~10,000 protein sequences used for training. To obtain “ground truth”, DSSP was used (see Secondary structure assignment) to calculate properties such as solvent accessibility and secondary structure states from the corresponding protein structures, resulting in a data set for training labeled with these properties. The parameters of the neural network were trained using small batches of protein sequences and their “ground truth” to result in a final model.

Figure 4:NetSurfP 3.0 output for a yeast protein that contains both α-helical as well as β-strand sections. Credits: Høie et al. (2022). The 3D structure of this protein was obtained from: Eswaramoorthy et al. (1999).

As we have seen in chapter 1, there are key similarities and differences between α-helices and β-sheets. Both secondary structure elements rely on hydrogen bonds between backbone atoms in the polypeptide chain. However, whereas residues involved in α-helices only have local interactions in the chain, β-sheet residues can have long-range interactions. Consequently, β-sheets are more difficult to predict for sequence-based prediction tools. The availability of sufficient homologous proteins can alleviate this bottleneck and provide reliable predictions of β-sheets as well. Also, the development of 3D structure prediction tools (see Tertiary protein structure prediction) is expected to lead to further improvements in predicting secondary structure elements and other per-residue properties like surface exposure/solvent accessibility based on sequence information alone.

Predicting transmembrane protein sections¶

In most cellular proteins, amino acid residues with hydrophobic side chains are found buried inside the protein 3D structure, thus effectively shielded from the hydophilic (polar) environment inside the cell. Cells are surrounded by a membrane that literally separates the inside of the cell from the outside world. Hence, if messages need to be passed on from outside to inside the cell, or vice versa, these messages will need to pass the membrane. The cellular membrane is composed of a lipid bilayer that exposes its hydrophilic headgroups into the outside and cellular environments and that points its hydrophobic acyl chains towards each other. To do so, the cellular machinery is using proteins to assist in signal transduction across the membrane. Where globular proteins are present within the cell, so-called transmembrane proteins span the membrane at least once. It is estimated that ~30% of the proteins in eukaryote cells are transmembrane, indicating their functional importance. The specific environment of the membrane in terms of its size (the thickness of the lipid bilayer) and polarity (the hydrophilic outsides and hydrophobic inside), only some local structural configurations are typically found to be able to span the membrane. These configurations are typically linked to the protein’s function: be it a receptor for signal transduction or a transporter of specific substances across the membrane.

Let us consider size first: the average thickness of a membrane is ~30 angstrom (Å), which corresponds to an α-helix of between 15 and 30 residues to make it fit within the membrane layer.

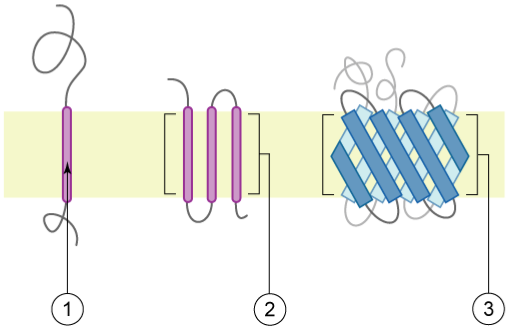

Figure 5:Schematic representation of proteins with transmembrane sections, also called transmembrane proteins, with the membrane represented in light yellow: 1) an alpha-helix containing protein spanning the membrane once (single-pass) 2) an alpha-helix containing protein spanning the membrane several times (multi-pass) 3) a multi-pass membrane protein containing β-sheets. Credits: CC BY 2.5 Foobar (2006).

The simplest local transmembrane element is an alpha-helix of ~ 15-30 amino acid residues with mainly apolar side groups (Figure 5, 1). The length is restricted by the thickness of the lipid bilayer, whereas the apolar side groups will have favorable interactions with the acyl chains of the lipids in the membrane. A transmembrane protein can span the membrane one or more times (i.e., Figure 5, 1 & 2), and in some cases a special configuration of the transmembrane proteins creates a “pore”-like structure. Here, some helical residues can be charged as the pore environment is completely shielded from the membrane bilayer. Another commonly used transmembrane configuration is the “beta-barrel”. This element consists of 8 – 22 transmembrane β-strands (although larger ones with even more β-strands may well exist) that together form a “barrel shape” (Figure 5, 3), effectively separating the inside of the barrel from the outside, thereby creating a pore in the membrane. Such pores can be sealed off with a “switch” in the form of a protein stretch that is either in the “open” or “closed” configuration.

Signaling peptides¶

The place where proteins are built in the cell is usually not where they act. To exert their function at the right place, proteins need to be transported from where they are folded and formed. The cellular machinery has developed a signaling system that use “peptide tags” to enable effective transport of polypeptide chains to their site of action. Such “tags” are called signal peptides. These are peptide recognition signals for the cellular transporter machinery. Typical sites of actions for which signal peptides exist include the cell membrane and the endoplasmic reticulum. Furthermore, signal peptides can steer proteins to be secreted from or imported into lysosomes. Typically, after arrival of the protein at its target location, the signal peptide sequence is enzymatically removed from the protein. Hence, the presence of such a signal peptide can provide important clues as to what the site of action of a protein may be based on its amino acid sequence.

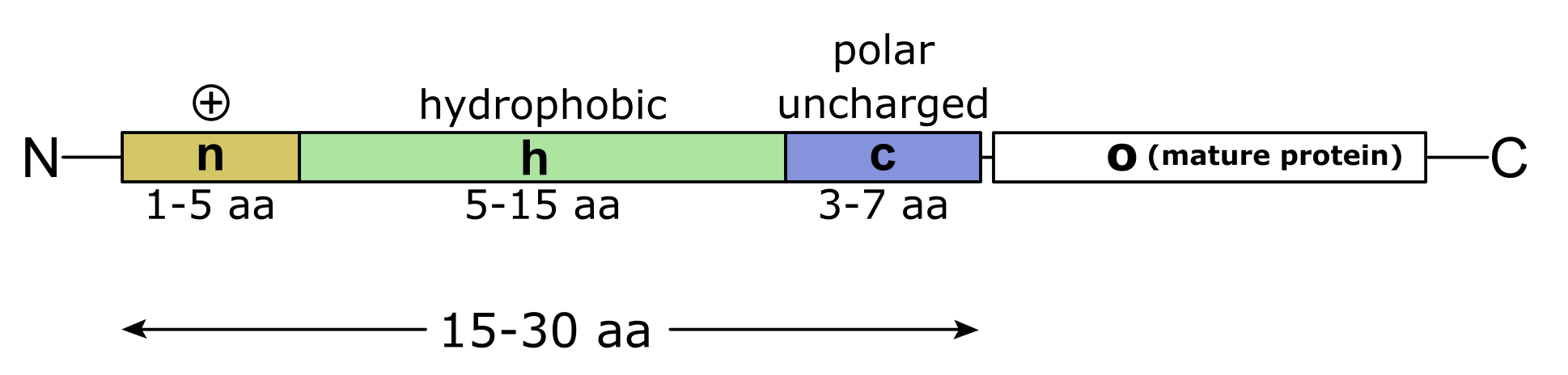

The signal peptide is an N-terminal leader amino acid sequence that consists of ~ 15-30 residues at the N-terminus of the protein sequence (Figure 6). The actual recognition of signal peptides by the cellular transporter machinery is not based on a conserved amino acid sequence, but it largely depends on the physicochemical properties of the amino acids in the signal peptide. A signal peptide typically consists of three regions: the first region (the n-region) usually contains 1–5 positively charged amino acids, the second region (the h-region) is made up of 5–15 hydrophobic amino acids, and the third region (the c-region) has 3–7 polar but mostly uncharged amino acids.

Figure 6:Schematic representation of a signal peptide and its positively charged N-terminal, hydrophobic core-region (h-region), the polar (mostly) uncharged c-region, and the mature protein (o). Credits: CC BY-SA 3.0 modified from Yikrazuul (2010).

Sequence-based prediction of transmembrane sections and signal peptides¶

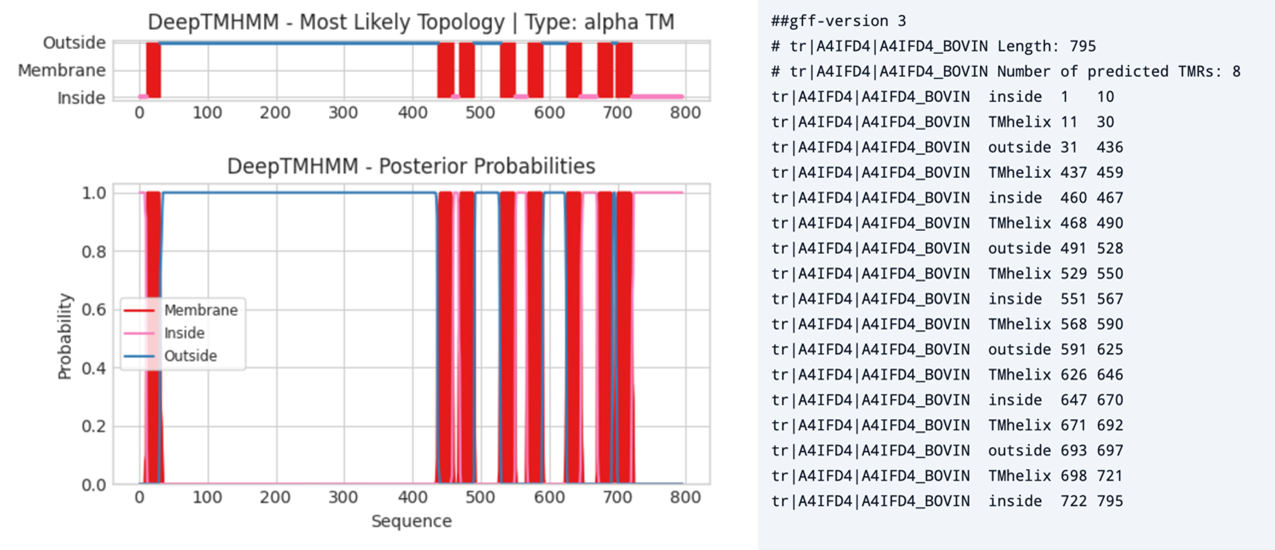

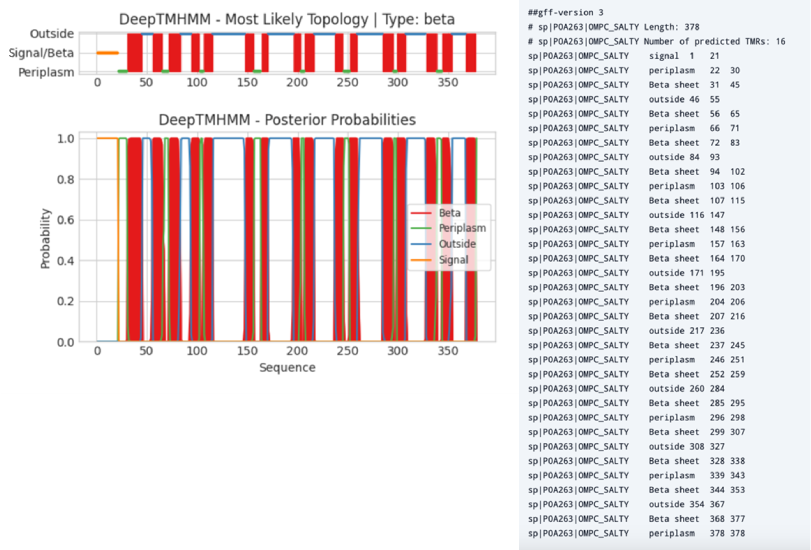

Given their functional clues, the accurate prediction of transmembrane sections and signal peptides based on amino acid sequence alone is very advantageous when studying the possible functions of unknown proteins. DeepTMHMM is currently the top-performing tool to predict transmembrane sections and signaling peptides in protein sequences. The program predicts several labels for each amino acid in a sequence: signal peptide (S), inside cell/cytosol (I), alpha membrane (M), beta membrane (B), periplasm /(P/) and outside cell/lumen of Endoplasmic reticulum/Golgi/lysosomes (O).

Both transmembrane sections and signal peptides are largely defined by the physicochemical properties of the amino acid residues that they constitute, rather than a conserved motif or short sequence of residues. This makes it very hard to recognize these secondary structure elements using classical methods based on alignment. Using machine learning methods, however, the characteristics of a training data set with known sequences can be learned and used for the prediction of unknown data. The trained models can subsequently judge the properties of amino acids in unknown sequences, thereby allowing the recognition of transmembrane sections and signal peptides. Hence, DeepTMHMM uses a deep learning model that takes a protein sequence as an input, and then outputs the corresponding per-residue labels. Taken all together and considering their order within the amino acid sequence, the residue labels define the predicted topology of the protein. We note how both DeepTMHMM and the below explained SignalP return probabilities (see also the conceptually similar likelihood in chapter 3) rather than absolute predictions, which is adventageous in giving a direct clue on the reliability of the prediciton. DeepTMHMM can predict five different topologies, namely alpha helical transmembrane proteins without a signal peptide (alpha TM), alpha helical transmembrane proteins with signal peptide (SP + alpha TM), beta-barrel transmembrane proteins (Beta), globular proteins with signal peptide (SP + Globular) and globular proteins without signal peptide (Globular). Importantly, the two secondary structure elements predicted here share properties and the deep learning model needed sufficient example data to differentiate transmembrane sections from signal peptides.

In Figure 7 you can observe a typical output of DeepTMHMM - alpha TM for a multi-pass transmembrane protein.

Figure 7:Left, output of DeepTMHMM - alpha TM prediction on Bovine Adhesion G protein-coupled receptor G7 (ADGRG7, A4IFD4). Right, a gff file of the same protein listing the number of transmembrane structures (α-helices), their amino acid positions, and whether the residues are inside or outside the membrane. Credits: Hallgren et al. (2022).

Figure 8 shows an example output of DeepTMHMM - beta for a beta-barrel transmembrane protein.

Figure 8:Left, output of DeepTMHMM - beta prediction on the outer membrane protein C (precursor) of Salmonella typhimurium (OMPC-SALTY, P0A263). Right, a gff file of the same protein listing the signal peptide position, the number of transmembrane structures (β-sheets), their amino acid positions, and whether the residues are in the periplasm or outside the membrane. Credits: Hallgren et al. (2022).

SignalP¶

In the previous section it was covered how DeepTMHMM can be used to predict the presence of signal peptides; however, more dedicated tools exist for the discrimination between signal peptide types, such as SignalP 6.0. This tool can predict signal peptides from sequence data for all known types of signal peptides in Archea, Eukaryota, and Bacteria. Additionally, SignalP 6.0 predicts the regions of signal peptides. Depending on the type, the positions of n-, h- and c-regions as well as of other distinctive features are predicted.

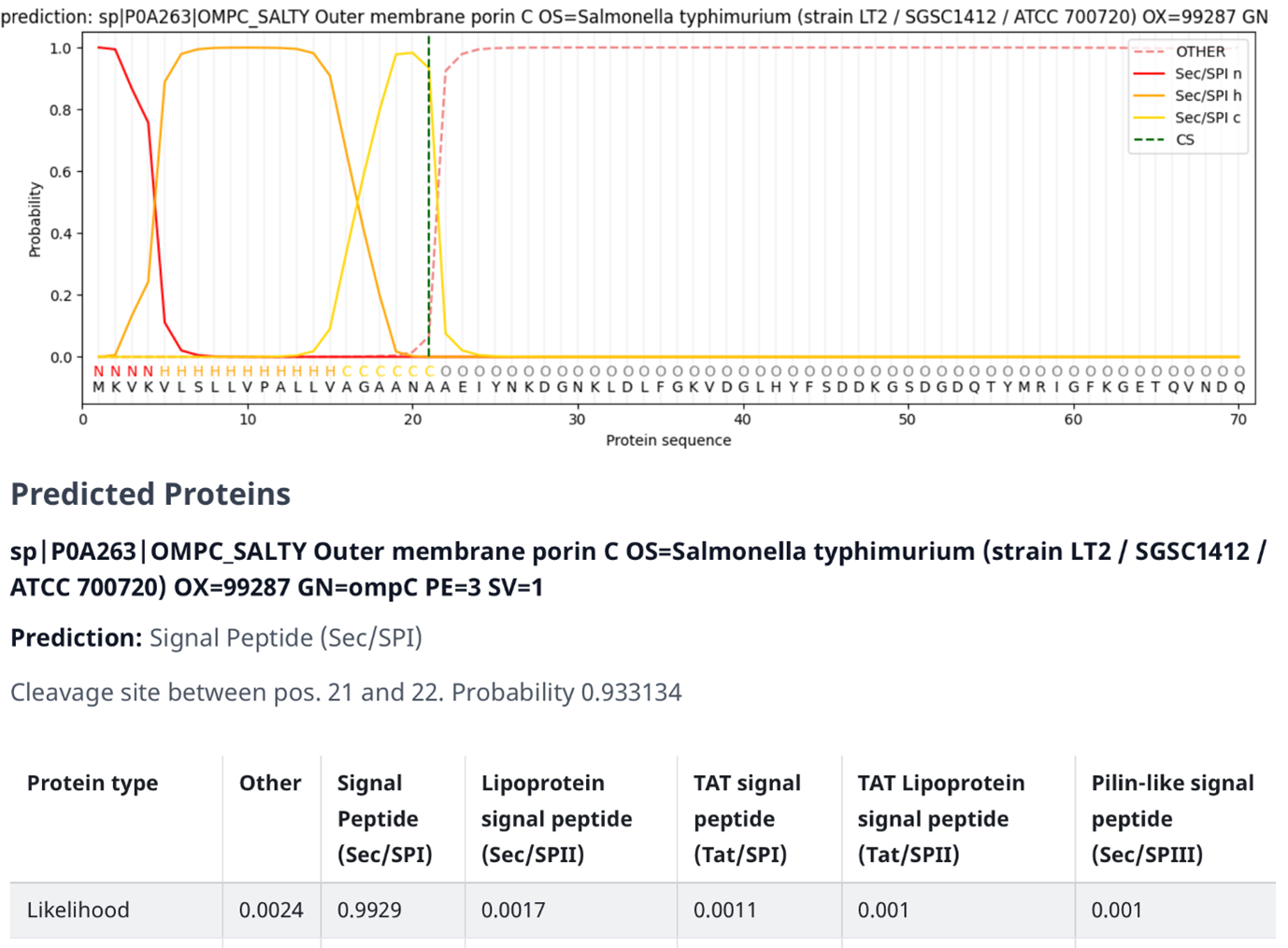

Figure 9:SignalP output on the outer membrane protein C (precursor) of Salmonella typhimurium (OMPC-SALTY, P0A263). Credits: Teufel et al. (2022).

In Figure 9 an example output of SignalP 6.0 is shown (note the resemblance of the structure of the signal peptide to Figure 6). The figure contains several elements:

The graph at the top consists of the following elements:

- The dark orange line (Sec/SPI n) indicates the probability of a specific region being identified as the N-terminus, which is also displayed as the letter “N” underneath the line.

- The light orange line (Sec/SPI h) indicates the probability of a specific region being identified as the h-region, which is also displayed as the letter “H” underneath the line.

- The yellow line (Sec/SPI c) indicates the probability of a specific region being identified as the c-region, which is also displayed as the letter “C” underneath the line.

- The dashed orange line (OTHER) indicates the probability of a specific region being identified as something other than the signal peptides subsections, such as the mature protein itself. This is also displayed as the letter “O” underneath the line.

- The dashed green line (CS) indicates the probability of a specific region being identified as the cleavage site, i.e., the point where the signal peptide gets separated from the rest of the protein sequence.

- The protein sequence underneath the letters that indicate which section a region belongs to.

The signal peptide score (the orange lines) is trained on the differentiation of signal peptides and other sequences and has a high value if the corresponding amino acid is part of the signal peptide. Therefore, amino acids of the mature protein have a low signal peptide score. The maximum cleavage score (the dashed green line) occurs at the position of the first amino acid of the mature protein, so one position after the cleavage site, The cleavage score analysis was trained on the recognition of the cleavage site between signal peptide and the protein sequence.

The standard secretory signal peptide is called Sec/SPI and it is transported by the Sec translocon and cleaved by Signal Peptidase I (Lep). There are four other signal peptide types (see also box below) but they are beyond the scope of this course. However, it is important to know that tools like signalP are able to distinguish between the different signal peptide types and make accurate predictions about their probabilities, based on probabilities.

The information below the graph in Figure 9 consists of the following elements:

- The prediction indicates the most probable type of signal peptide for the given sequence.

- The cleavage site shows the amino acid location in the protein sequence where the cleavage site is located, as well as its probability.

The table at the bottom of the page consists of the following elements:

- Likelihood/probability scores for the different types of signal peptides and the chance of it not being a signal peptide at all (Other).

Tertiary protein structure prediction¶

First, it is good to realize that the prediction of secondary structure elements has formed the foundation of tools that predict 3D structures of proteins. We will first explore the three traditional structure prediction approaches, which will be followed up by the most prominent new approach in 3D structure prediction (AlphaFold) that relies on several concepts of the traditional approaches.

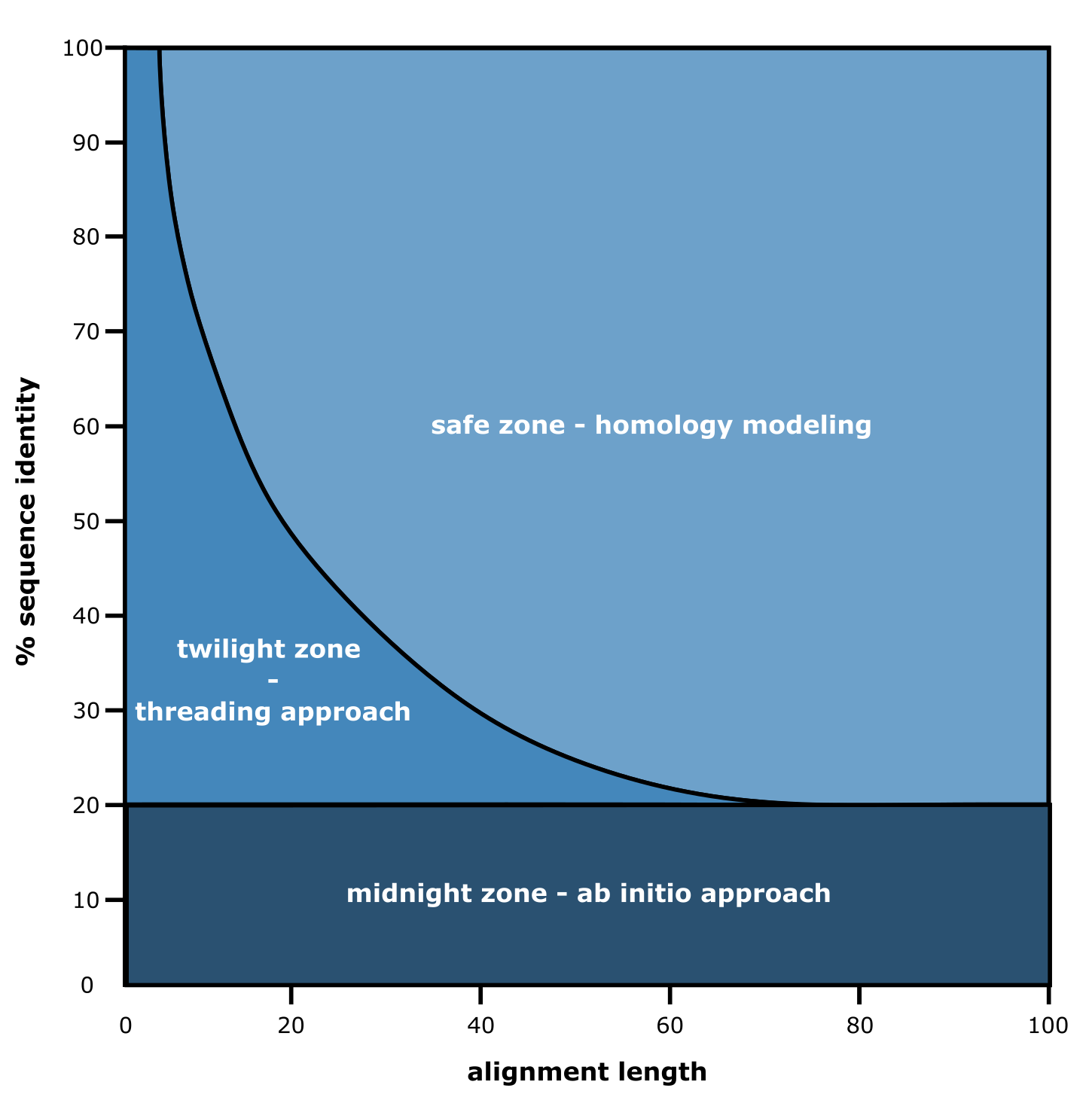

Figure 10:The three zones of tertiary structure prediction approaches. Credits: [CC BY-NC 4.0] Ridder et al. (2024).

Various approaches including ab initio, threading (also called fragment-based modelling), and homology modelling have been proposed and used to go from sequence to structure, with both sequence identity and alignment length as the most important factors to decide which approach to choose. To make an effective choice between these three traditional structure prediction approaches, a so-called three zones concept was proposed (Figure 10). According to the figure, as we can observe, below 20% sequence identity between the query protein sequence and sequences with experimentally derived structures, one needs to refer to ab initio approaches, literally translating as: from the start. As such approaches are computationally heavy and as they also require a lot of expert knowledge, they are not widely used. In essence, such approaches aim to model the protein sequence folding process using physicochemical properties of the amino acid residues and their surroundings. As the sequence length used increases, ever-increasing possible folds occur for the entire 3D structure, making it a computationally intensive task. For example, consider 100 amino acid residues that each have their psi, phi, and omega angles. If they would (only) have 3 possibilities per angle, this would lead to 3^300 (= 10^143) possible folds for the sequence. If each fold would take just 1 second to assess its likelihood to be realistic and energy-favorable, it would take us 10^126 years to analyze and come up with a suggested 3D structure, and that is just 100 amino acids under severe constraints.

Fortunately, the database of experimentally derived protein 3D structures is constantly growing. Therefore, there is a good chance of having >20% sequence identity of your query sequence. As we can see in Figure 10, the length of the sequence alignment is another crucial factor: if a shorter stretch is matching, the threading (or fragment-based) approach can be used. This approach focuses on matching these stretches to known folds, i.e., local structure often consisting of secondary structure elements. This can already help to hypothesize on the protein’s function, if a functional domain is matched to the query sequence. As this approach is also relatively computationally demanding, and newer approaches as discussed below (i.e., AlphaFold) excel in recognizing such folds, we will not gain practical experience with the threading approach during this course.

If both the query sequence identity and length of the alignment are large enough, homology modelling can be attempted to create a structure model. So-called “template sequences” have to be found in the protein structure database that are “similar enough” to serve as a structural blueprint for the 3D prediction.

Nowadays, SWISS-MODEL provides precalculated 3D homology models. It is important to note that SWISS-MODEL now also contains the AlphaFold deep learning-based models (see AlphaFold section). A key aspect of working with models is to assess how reliable they are. Since homology modelling and AlphaFold’s models have different ways of checking their reliability, it is important to notice the origin of the 3D structure models. You will learn how to do that for homology modelling and for AlphaFold models during the practical assignments.

Still, new protein sequences that with little 3D structural resemblance to existing ones are discovered almost every day, thus falling in the midnight zone of Figure 10. Hence, the scientific community has been adopting various artificial intelligence-based approaches of which AlphaFold is the most prominent one to date.

AlphaFold¶

In 2018, the DeepMind team of Google introduced a machine learning-based approach called AlphaFold, with AlphaFold 2 following in 2020, and most recentlty version 3 was released in 2024. In this reader, we will mainly discuss AlphaFold 2 and refer to it as AlphaFold. This reader describes how AlphaFold builds on previous approaches and has already had substantial impact in biochemistry and bioinformatics. When it comes to the suite of possibilities as well as the disruption AlphaFold has caused, an analogy could be made to the introduction of the smartphone: whereas previously, one needed to go to the library to find a computer and connect to the internet to get to a weather forecast, one now simply takes the phone and looks up the weather. This section explains why AlphaFold could be developed and work only in the present time, how it was compared to other approaches in a fair manner, how it relies on database search and multiple sequence alignment, and what the introduction of the AlphaFold Protein Structure Database (AlphaFold DB), that contains AlphaFold-predicted structure models, means for discovery pipelines.

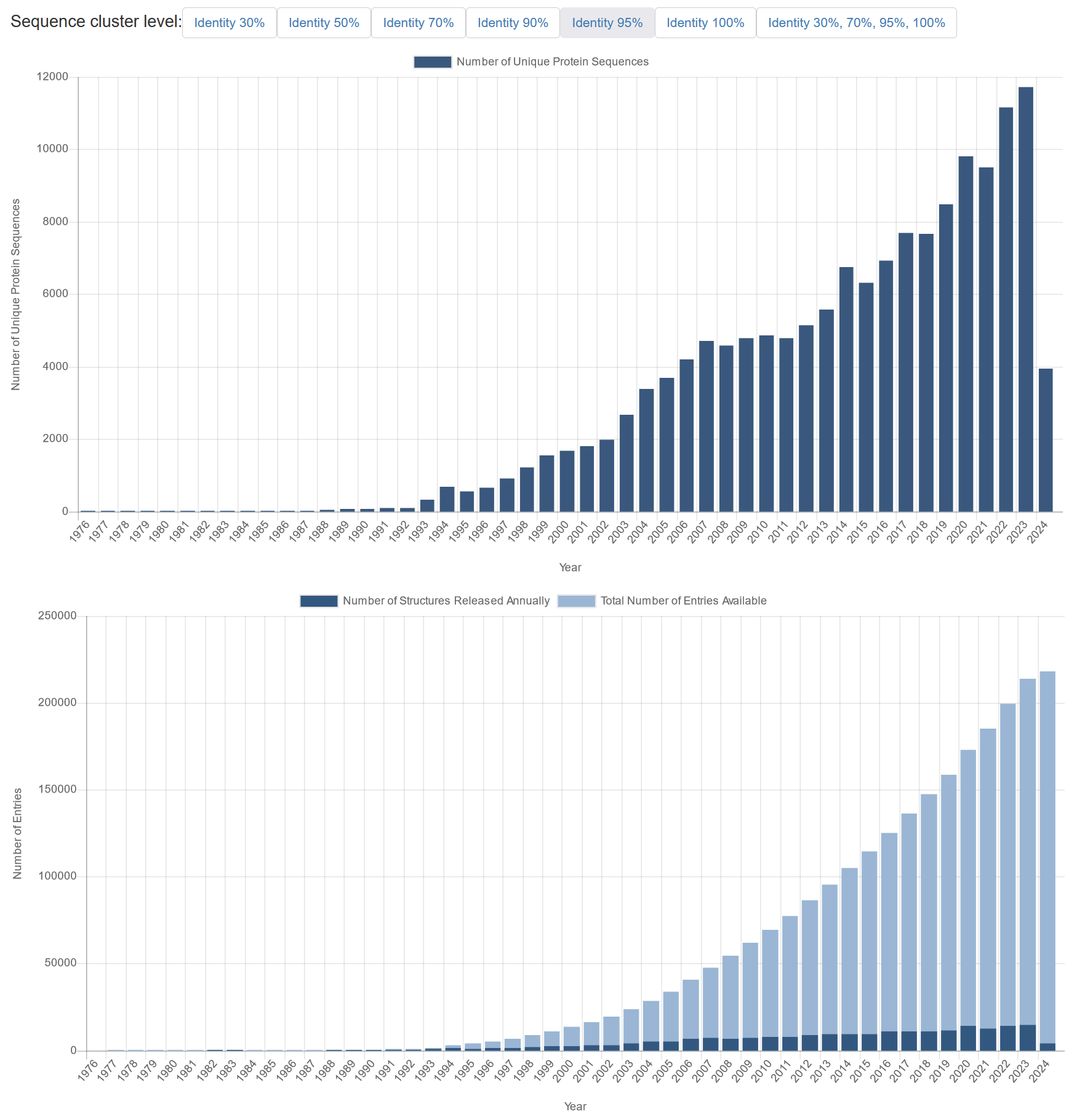

Figure 11:Top graph: number of unique 3D protein structures (based on 95% sequence identity) that have been added annually to the Protein Data Bank from 1976 – early 2024. Lower graph: Actual (dark blue) and cumulative (light blue) number of total protein entries (i.e, not grouped by uniqueness) added to to the Protein Data Bank from 1976 – early 2024. Note: the low number of structures in 2024 is caused by these statistics being taken from PDB early in 2024. Credits: CC BY 4.0 Berman et al. (2024).

The AlphaFold approach is based on machine learning, i.e., computer algorithms that fit a predictive model based on training data. Such a model can then predict the structure, when given a sequence that it has not seen before. DeepMind made use of very large neural network models, so-called deep learning. The training data for such models ideally consists of many known examples for very complex problems such as protein structure prediction. The Protein Data Bank (PDB) collects such experimental training data, i.e., measured protein 3D structures. By now, ~218,000 PDB entries are available of ~150,000 unique protein sequences at 95% sequence similarity (Figure 11, top). The latter number is important, as a sufficiently diverse set of examples will ensure that there are enough examples in the training data to recognize relevant patterns of various protein folds and other structural features.

As input for their most recent machine learning model, the DeepMind team predicted the structure of many protein sequences, and after filtering for high-quality and reliable predictions, 100,000 protein sequences were added to the training data, a technique called data augmentation. Thus, at the time of model training, the team could use around 300,000 protein sequences - 3D structure combinations to train their AlphaFold model that uses a FASTA file as input and outputs a 3D structure model that is described in the Assessing a protein structure model quality section.

The impact of AlphaFold on the biochemistry field¶

The true impact of AlphaFold would be difficult to assess without an independent test data set. Since protein folding and 3D structure prediction is one of the grand challenges of biochemistry, the Critical Assessment of protein Structure Prediction (CASP) competition was founded in 1994. CASP is a community-wide competition where research groups are required to predict 3D structures from protein sequences that do not have any public 3D structure available. More than 100 research groups worldwide join the CASP competition every two years. Using all sequence and structure data available at the present time, they predict structures for protein sequences with newly derived (yet unreleased) structures, specifically withheld from the public for the purpose of this competition.

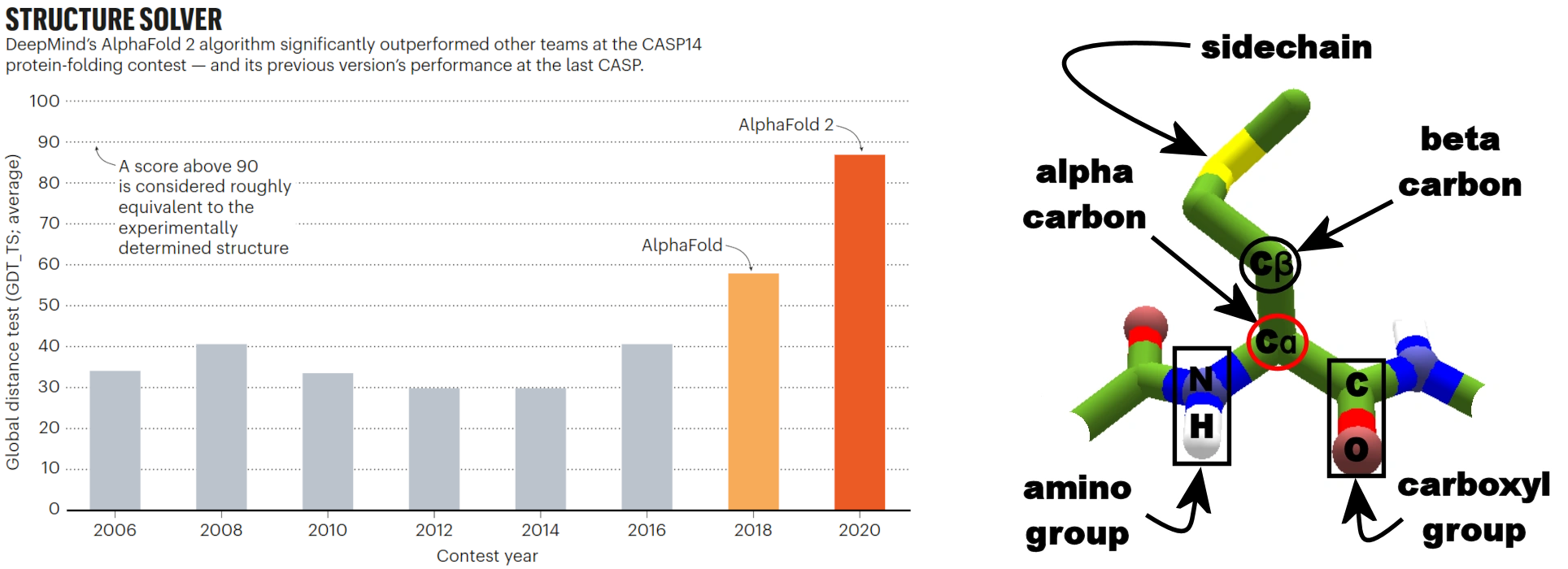

Figure 12:Left: Average GDT tests of the contest winners. Right: Schematic view of amino acid residue in protein backbone with key atoms labelled, including the alpha carbon used for GDT-TS. Credits: Left: Callaway (2020) and right: CC BY-SA 3.0 LociOiling (2018).

The left side of Figure 12plots on the y-axis the main evaluation metric that CASP uses: the Global Distance Test – Total Score (GDT-TS) as an average over the challenges (i.e., protein sequences with no publicly known 3D structures). It measures what percentage of α-carbons (Figure 12, right) of the amino acids in the predicted structure are within a threshold distance in Ångstroms (to be precise, the average of four thresholds: 1, 2, 4, 8 Å) of the known structure, for the best possible alignment of the two. Figure 12 shows how after years of stagnation, in 2018 AlphaFold clearly made a substantial improvement over the results of earlier years, thereby showing the general impact on the field that AlphaFold has made. Furthermore, the progress of the subsequent AlphaFold models is also visible in the increase in GDT-TS. In 2020, the prediction results of their updated system, AlphaFold 2, were so accurate that the structure prediction problem had been dubbed as ‘solved’ by some. In May 2024, the Google DeepMind team released AlphaFold 3. Although the results of the next iteration of CASP (CASP16) are known at the time of writing, there are no figures yet available of comparisons between AlphaFold 3 and its predecessors together with other approaches. It is expected that the results are still dominated by AlphaFold, and in the meantime new challenges include protein-ligand docking, something that AlphaFold 3 has started to predict as well. It is good to note that a score of 100 is not feasible by any predictive method, since there are areas in the protein structure that are inherently difficult to model, i.e., very flexible parts or transitions between, e.g., helix and a random coil. Hence, a score between 90-95% is considered equally well as an experimentally derived 3D structure, a score that AlphaFold 2 nearly reached.

With the above in mind, let us look at Figure 12 again. The maximum average GDT-TS score in 2020 has more than doubled since pre-2018 editions. This means that for many more protein sequences, we can gain some sort of reliable insight in their 3D structure. Following the sequence-structure-function paradigm, this also provides us insight into their possible functions. Since there are still many protein sequences with unknown functions, predictive software like AlphaFold can play a very important role in understanding their functions and roles in biochemistry. Now that we better understand the impact of AlphaFold, let us find out more about how it works.

AlphaFold under the hood¶

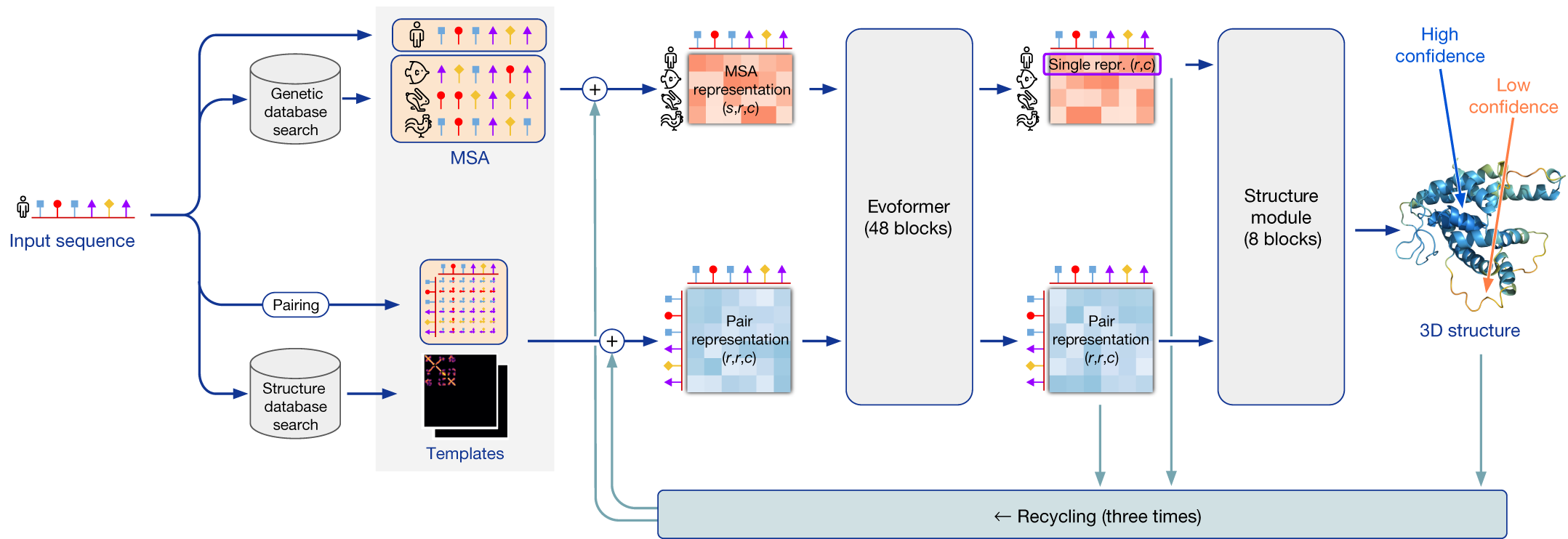

To make a prediction with AlphaFold, all you need is a FASTA file with the protein primary sequence of interest. The core of AlphaFold’s working is a sophisticated machine learning model. However, it was not built from scratch: it heavily builds on previously developed approaches to create reliable structure models. The most recent AlphaFold implementation can be summarized in three key modules that link to previous concepts and knowledge in this reader. These three modules will be explained below.

The first module processes the protein sequences into so-called numeric “representations” that can be used as input for the machine learning model. To create these representations, first a database search is performed (chapter 2) to find the most suitable sequences based on similarity. Following that, two representations are created (i.e., the upper and lower path in Figure 13): a multiple sequence alignment (MSA – a concept introduced in chapter 2 and used in chapter 3), which captures sequence variation; and a representation of how likely all residues interact with each other (i.e., that are close to each other in the 3D structure), in the form of a contact map. The database search is also used to find if there are any suitable “templates” in the PDB database. Up to four top templates can be chosen to serve as a starting position for the prediction models. Please note that this is the first step in homology modelling as well, and that AlphaFold can make “good” predictions on a good quality multiple sequence alignment (MSA - see chapter 2) alone; hence, there is no need for templates.

It is important to realize that AlphaFold bases itself largely on co-evolutionary information. Let us briefly reflect on why this is relevant for structure prediction. As you may have realized by now, the residue position in the protein primary sequence does not reflect its final position in 3D space: residues far away in the primary sequence may end up close to each other after folding and they may have specific interactions with each other that stabilize the 3D structure. The concept of co-evolution implies that if two interacting residues are important for the protein’s function, they are likely to co-evolve. In other words, if one of them changes into a different amino acid, the other will likely have to change as well to maintain the interaction to support the protein’s 3D structure. Such genomic signals can only be extracted when we compare many protein sequences with each other. Therefore, a deep MSA of high quality is essential for good predictions. Here, AlphaFold uses MSA to extract evolutionary signals and predict co-evolution of residues.

The second module uses the representations from the first module and aims to find restrictions in how the protein sequence folds into its 3D structure. This part is the actual machine learning model, and we will consider it largely as a black box. The model uses deep learning to learn which input features are important to predict the protein folding based on data-driven pattern recognition. The model passes information back and forth between the sequence-residue (MSA) and residue-residue (contact map) representations. This part requires a lot of computation time and effort and thus needs a good infrastructure that is not available to all laboratories. The DeepMind team had the powerful resources needed to train the extensive machine learning model.

The third and final module is the structure builder where the actual folding and refinement of the structure model takes place using the phi, psi, and omega angles of the amino acid atomic bonds (see also chapter 1). Furthermore, local and global confidence scores are determined. Several prediction cycles usually take place where the predicted 3D structure model serves as a new input (i.e., template) for the structure prediction to allow for further fine-tuning. The structure builder takes input from several independently trained models. This yields several 3D structure models with tiny or large differences, which are finally ranked according to the models’ confidence scores (see Assessing a protein structure model quality).

To summarize the AlphaFold process, database searches are done to construct MSAs and find templates, the exact same input is given to several identical machine learning models with slightly different parameter settings, and the structure builder creates 3D structure models for them that are ranked based on confidence scores to report the best performing model.

Figure 13:Schematic overview of AlphaFold approach. Credits: modified from Jumper et al. (2021).

AlphaFold Protein Structure Database¶

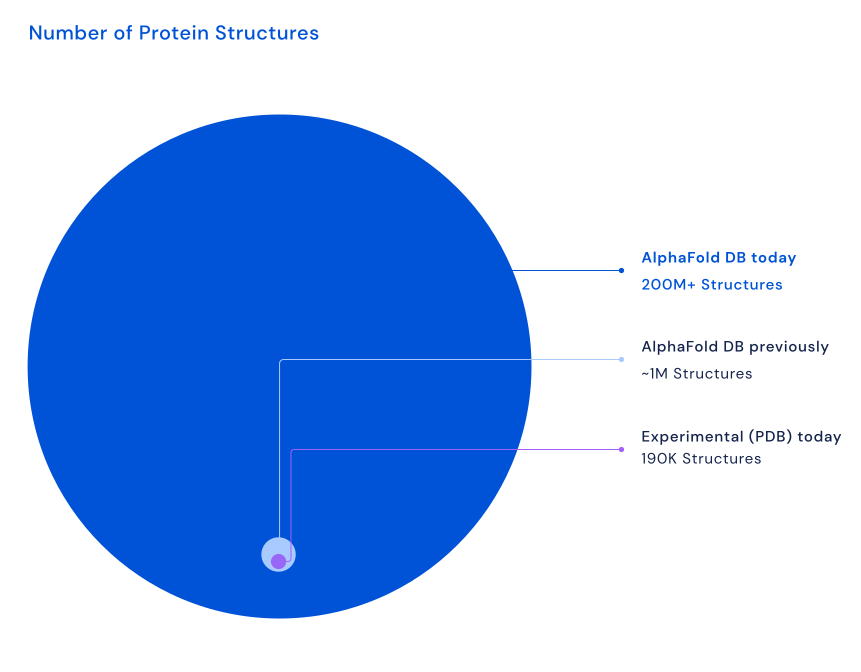

The computation of AlphaFold predictive models costs a lot of computation time and resources (see The impact of AlphaFold on biochemistry and AlphaFold under the hood). To avoid running AlphaFold over and over on the same protein sequences and to facilitate the dissemination and inspection of AlphaFold protein structure models, the DeepMind team collaborated with EMBL’s European Bioinformatics Institute (EMBL-EBI) to create the AlphaFold Protein Structure Database (AlphaFold DB). Currently, the resource contains over 200,000,000 structure models. The first AlphaFold DB release covered the human proteome, along with several other key organisms such as Arabidopsis thaliana and Escherichia coli. Actually, for these species, most protein sequences in their UniProt reference proteome were folded by AlphaFold. Subsequent releases expanded the list of included organisms. The most recent release contains predicted structures for nearly all catalogued proteins known to science, which will expand the AlphaFold DB by over 200x - from nearly 1 million structures to over 200 million structures (see Figure 14), covering most of UniProt. It is expected that in the coming years all hypothetical proteins will be added to AlphaFold DB. This will then – for example – also include viral proteins that are currently excluded.

AlphaFold DB can be searched based on protein name, gene name, UniProt accession, or organism name. In one of the practical assignments, you will learn how to work with AlphaFold DB and how you could incorporate it in your biological discovery pipeline. One important remaining question is: how do we know if we can trust the predictions? In other words, how do we know if we can be confident in the 3D structure models that AlphaFold predicts and that AlphaFold DB contains?

Figure 14:The most recent release includes predicted protein structures for plants, bacteria, animals, and other organisms, opening up many new opportunities for researchers to use AlphaFold to advance their work on important issues, including sustainability, food insecurity, and neglected diseases. Note that PDB contains experimentally validated structures (~218K nowadays) and AlphaFold produces predicted structure models. Credits: Hassabis (2022).

Protein structure model quality¶

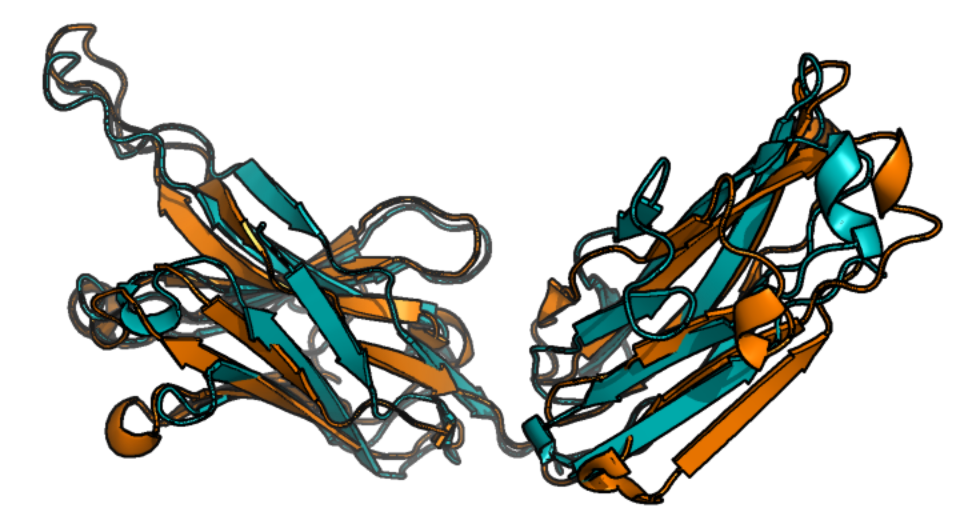

Figure 15:Heavy chain portion of the crystal structure of an antibody (PDB: 7MBF, in orange) superposed with the AlphaFold 2 prediction (in blue). The overlay view shows how the folding of the two domains is largely predicted correctly, with some parts of the 3D protein structure that fit the PDB structure better than others. Credits: Rubiera (2021).

Predictive models only have true value when they produce some measure of confidence, because without any idea of certainty about the predictions, it is hard to interpret the results and draw meaningful conclusions. To get an idea of how well predictions fit the reality, one needs to compare the model with the true situation. Figure 15 shows how this can be done by manual visual inspection of two super-imposed structures, the “real” (experimentally derived) one and a predicted one. However, to quantitatively assess differences between models, some sort of numeric score is needed. Here, we will list several of them that you will encounter during this week. In this reader, we have seen one such comparative measure for 3D protein structure models in the CASP section: the Global Distance Test – Total Score (Figure 12). Another score you may encounter is the root mean squared error (RMSE), based on the difference in position of the α-carbons as input to calculate the score. In principle, the smaller the RMSE of a model is, the better. When doing homology modelling, the QMEAN-DISCO score used by SWISS-MODEL is used as a quality measure. This score is an ensemble of various metrics that together provide insight into the quality of the model.

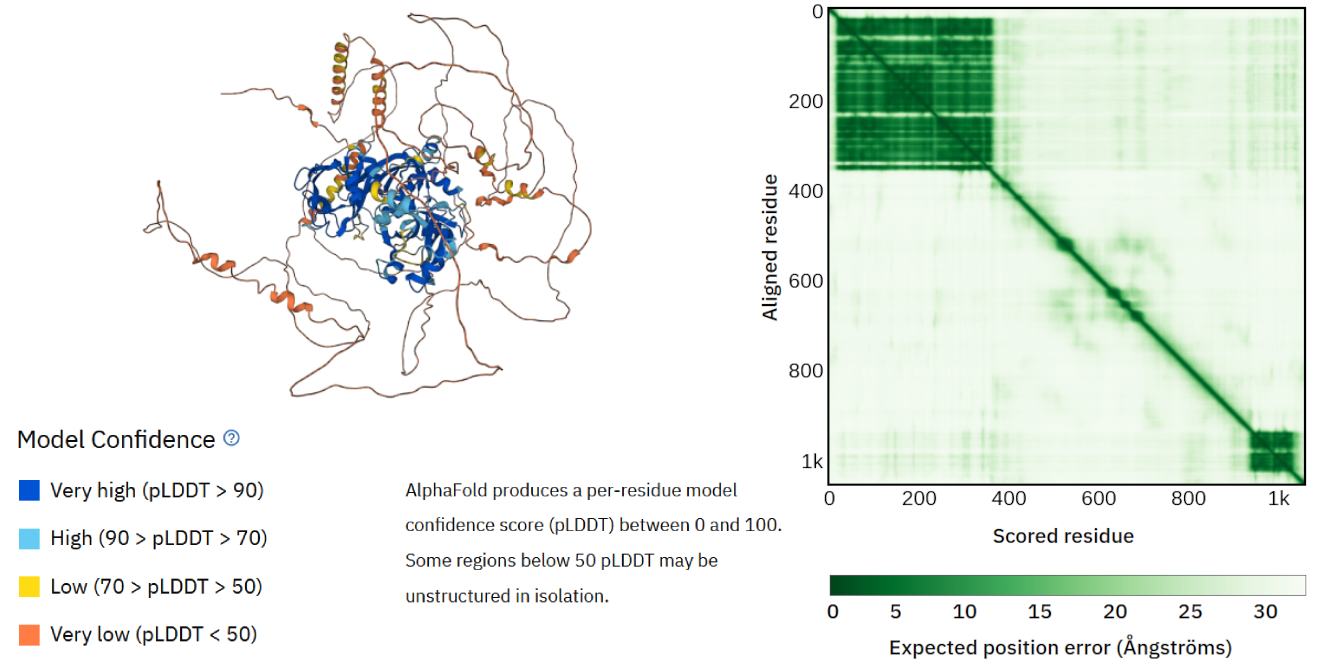

To assess the confidence in the model structure without a direct comparison to a known structure, one needs to assess the uncertainty in the position of the amino acid in the 3D coordinate system. AlphaFold comes with its own local and global error predictions that the machine learning model calculates (see also AlphaFold under the hood), and Figure 16). Where the local error focuses on individual positions of amino acids, the global error describes how confident the predictions are for various protein parts that can interact through residue-residue interactions. The local error is also used to color-code the residues of the model in the 3D structure viewer. In this way, it is easier to observe which parts of the structure model are more reliable than others. You will study these two different error scores more during the practical assignment.

Figure 16:Left: AlphaFold 3D protein structure model of Auxin Response Factor 16. The amino acid residues are colored according to the local confidence score (see AlphaFold-PSD and practical assignment for further explanation). Right: the AlphaFold global error confidence score overview. This view shows low errors (dark green) for two parts of the protein structure model, and higher errors for the remaining structure – these also correspond to less confidence locally (see AlphaFold-PSD and practical assignment for further explanation). The structure model can be used to validate the protein’s predicted function and can act as a starting point for further annotations, such as finding its biological interaction partners (i.e., other proteins, DNA, small molecules). Credits: CC BY 4.0 AlphaFold DB (2022).

When using databases, you may have started to realize that they can also contain erratic entries. To investigate the quality of both known and predicted 3D protein structures, the Ramachandran plot can be used (chapter 1), e.g., by checking for any amino acid residues placed in the non-preferred regions of the Ramachandran plot. You will work with the Ramachandran plot during the practical assignments. It is important to note here that some disordered proteins only come into orderly arrangement in the presence of their various protein partners; and other proteins never have ordered structures under any conditions, a property that may be essential to their function. How to best model the behavior of such proteins is still an area of active research.

The above-described confidence measures are also useful in highlighting limitations of a predictive approach. In the AlphaFold-related practical assignment, you will see some examples of this. The main lesson is that you must treat a prediction as a prediction: it is a model of reality and may not accurately represent it. Also, keep in mind that there are parts of the 3D protein structure that we can naturally be more confident about. For example, secondary structure oftentimes supports the 3D protein structure, and parts of the protein that are naturally more disordered, such as random loops, are harder to predict correctly. Such parts can typically represent parts of the protein structure that are more flexible in their biological environment and any prediction of (very) flexible parts should therefore be considered as a snapshot of the protein structure. If you study Figure 15 in more detail, you will see this reflected in the superimposed image of the PDB (experimental) 3D structure and the AlphaFold structure model. In figure Figure 16 you can see the prediction model is more confident with the less flexible parts in the center of the protein and less confident with the flexible parts on the outer edges of the protein.

A protein structure model: and now?!¶

Imagine you have generated a protein structure model, such as the one in Figure 15. What can you do with it? As mentioned above, it can yield insights into its possible biochemical function and role. In other words, you can start to form hypotheses that can be experimentally tested in the lab. You can also start to make predictions of protein-protein interactions. Since such interactions are typically driven by 3D structural elements such as clefts, pockets, etc., which often serve as active sides, predicting such 3D structure elements from sequences will contribute to more confidently predicting protein-protein interactions. Furthermore, you have seen how comparing protein sequences in multiple sequence alignments helps to gain insight into their evolutationary and functional relationships; by using 3D structure models as an input, a similar comparison could be done at the structural level, i.e., establishing “functional homology”. We are increasingly aware that structure is more conserved than sequence; thus, (multiple) structure alignments at the level of folds or subunits may give a deeper view on protein relationships.

Foldseek¶

A recent tool that allows us to do structure-based alignments based on protein structure input in a reasonable time frame is Foldseek Kempen et al. (2024). Foldseek uses a novel 3D-interactions (3Di) alphabet together with an extremely fast BLAST-like sequence search method. This way, the team behind Foldseek overcame the mounting task of doing structure-based comparisons at the very large scale that the availability of >200 million AlphaFold structures requires. For example, a traditional structure-based alignment tool would take ~1 month to compare one structure to 100 million ones in the database.

During the practical assignments, you will explore how the combination of AlphaFold and Foldseek can be used to explore possible functions for a protein sequence of interest.

Tertiary structure prediction outlook¶

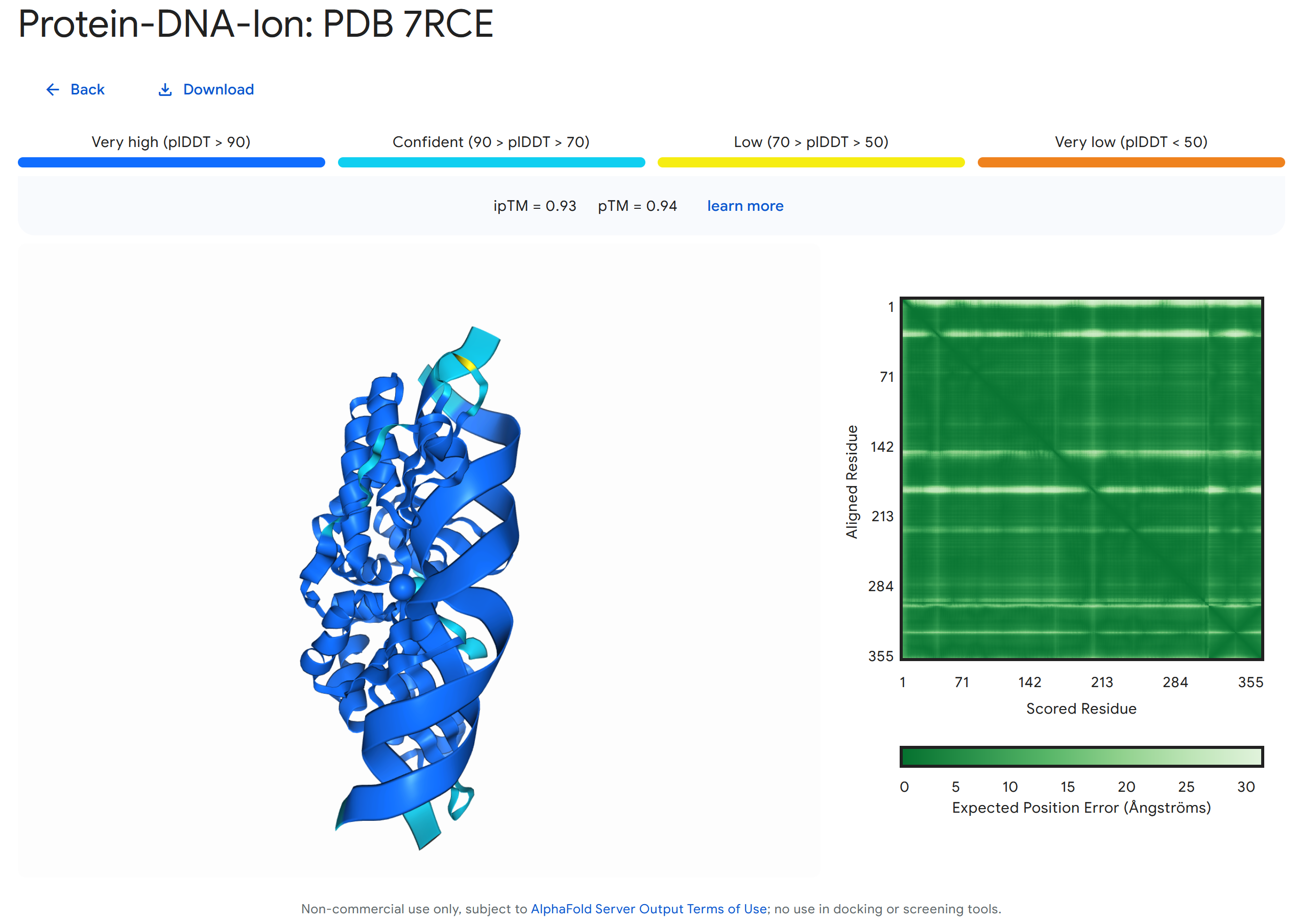

It can be expected that the AlphaFold model will continue to develop. For example, the most recent addition in AlphaFold 3 is joint structure prediction of complexes including proteins, nucleic acids, small molecules, ions, and modified residues (Figure 17).

Figure 17:Example of a joint structure prediction of a 7RCE protein interacting with a section of double helix DNA and two ions (Ca²⁺ and Na⁺) made with AlphaFold Server. Credits: Abramson et al. (2024).

This recent trend indicates a development in structure prediction from singular structure types to multiple structure types and a paradigm shift from sequence to structure based research.

Another topic of interest is modelling protein dynamics. Many proteins can change shape and thereby function, for example depending on cellular conditions, but this is still very hard to model. Finally, we are only starting to explore the role of post-translational modifications in generating many (structurally, functionally) different versions of each protein, so-called proteoforms. Based on its current performance, it will be exciting to see where the field is ten years from now. Akin to the mobile phone - smartphone development we have witnessed over the last decade, we may be surprised by its capabilities by then.

Practical assignments¶

This practical contains questions and exercises to help you process the study materials of Chapter 4. You have 2 mornings to work your way through the exercises. In a single session you should aim to get about halfway through this block, i.e., assignments I-III, but preferably being halfway with assignment IV. These practical exercises offer you the best preparation for the project in chapter 6 and the tools and their use are also part of the exam material. Thus, make sure that you develop your practical skills now, in order to apply them during the project and to demonstrate your observation and interpretation skills during the exam.

Note, the answers will be published after the practical!

Glossary¶

- Ab initio approach

- 3D protein structure prediction approach that are computationally heavy, need human expert input, and model the protein sequence folding process using physicochemical properties of the amino acid residues and their surroundings.

- AlphaFold

- Machine learning-based 3D protein structure prediction approach that uses a deep learning model trained on the entire Protein Data Bank.

- AlphaFold Protein Structure Database (AlphaFold DB)

- Resource that contains over 200 million AlphaFold-predicted 3D protein structure models.

- Critical Assessment of protein Structure Prediction (CASP)

- Community-wide competition where research groups are required to predict 3D structures from protein sequences that do not have any public 3D structure available. It was recently extended with additional challenges such as the prediction of protein-protein interactions, and of protein-ligand interactions.

- DeepTMHMM/TMHMM

- Tools that predict transmembrane sections in proteins using a deep learning model (DeepTMHMM) or hidden markov model (TMHMM) that takes a protein sequence as an input, and then outputs the corresponding per-residue labels.

- Expected position error

- Global error measure predicted by AlphaFold to indicate the confidence in the location of the amino acid residue as compared to all other residues.

- Foldseek

- Tool for large-scale structure-structure based protein alignments based on the 3D-interactions (3Di) alphabet.

- Global Distance Test – Total Score (GDT-TS)

- Evaluation metric for 3D protein structure predictions used by CASP: percentage of α-carbons of the amino acids in the predicted structure are within a threshold distance in Ångstroms percentage of α-carbons of the amino acids in the predicted structure are within a threshold distance in Ångstroms of the known structure, for the best possible alignment of the two.

- Homology modelling

- 3D protein structure prediction approach that uses template structures of experimentally measured protein structures.

- NetSurfP

- Machine learning approach to predict secondary structure elements like α-helices and β-strands using a deep learning model.

- Labelling

- Adding known caterogies to data points, for example the structural state of an amino acid, i.e., α-helix, β-strand, or “random coil”.

- Protein sequence-structure-fucntion paradigm

- This theory postulates that amino acid sequences contain in principle all information to describe their 3D structures, and how protein 3D structures contain in principle all information to define their functions.

- Root Mean Squared Error (RMSE)

- Quality assessment score of how well two 3D protein structures compare to each other, using the difference in position of the α-carbons of the amino acid residues.

- SignalP

- Machine learning based tool that predicts and discriminates between different signal peptides types.

- Signalling peptide

- Peptide recognition signals for the cellular transporter machinery to transport protein to the location of its activity, i.e., the cell membrane or the endoplasmic reticulum.

- Per-residue model confidence score (plDDT)

- Local error measure predicted by AlphaFold to indicate the confidence in the location of the amino acid residue as compared to neighbouring residues in the primary sequence.

- Protein Data Bank (PDB)

- Resource that contains experimentally measured protein 3D structures.

- Threading approach

- 3D protein structure prediction approach that predict the 3D structure of short stretches or folds of proteins, also called fragment-based approach.

- Transmembrane section

- Part of a protein sequence that stretches through the cell membrane.

- Transmembrane protein

- Protein that spans the cell membrane at least once.

- Tree zones (of protein tertiary structure prediction)

- Based on a plot of percentage of sequence identity versus alignment length of a query protein sequence versus database entries, three “zones” can be defined. Depending on in which zone the best match falls for a query sequence, different traditional approaches are required to predict (part of) its 3D structure.

- Rubiera, C. O. (2021). AlphaFold 2 is here: what’s behind the structure prediction miracle. https://www.blopig.com/blog/2021/07/alphafold-2-is-here-whats-behind-the-structure-prediction-miracle/

- de Ridder, D., Kupczok, A., Holmer, R., Bakker, F., van der Hooft, J., Risse, J., Navarro, J., & Sardjoe, T. (2024). Self-created figure.

- Høie, M. H., Kiehl, E. N., Petersen, B., Nielsen, M., Winther, O., Nielsen, H., Hallgren, J., & Marcatili, P. (2022). NetSurfP-3.0: accurate and fast prediction of protein structural features by protein language models and deep learning. Nucleic Acids Research, 50(W1), W510–W515. 10.1093/nar/gkac439

- Eswaramoorthy, S., Swaminathan, S., & Burley, S. K. (1999). CRYSTAL STRUCTURE OF YEAST HYPOTHETICAL PROTEIN YBL036C-SELENOMET CRYSTAL. 10.1107/S0907444902018012

- Foobar. (2006). Polytopic membrane protein. https://commons.wikimedia.org/wiki/File:Polytopic_membrane_protein.png

- Yikrazuul. (2010). Signal sequence. https://commons.wikimedia.org/wiki/File:Signal_sequence.svg

- Hallgren, J., Tsirigos, K. D., Pedersen, M. D., Armenteros, J. J. A., Marcatili, P., Nielsen, H., Krogh, A., & Winther, O. (2022). DeepTMHMM predicts alpha and beta transmembrane proteins using deep neural networks. Web Server. https://doi.org/10.1101/2022.04.08.487609

- Teufel, F., Almagro Armenteros, J. J., Johansen, A. R., Gíslason, M. H., Pihl, S. I., Tsirigos, K. D., Winther, O., Brunak, S., von Heijne, G., & Nielsen, H. (2022). SignalP 6.0 predicts all five types of signal peptides using protein language models. Nature Biotechnology, 40(7), 1023–1025. 10.1038/s41587-021-01156-3

- Berman, H. M., Westbrook, J., Feng, Z., Gilliland, G., Bhat, T. N., Weissig, H., Shindyalov, I. N., & Bourne, P. E. (2024). PDB Statistics. https://www.rcsb.org/stats/

- Callaway, E. (2020). ‘It will change everything’: DeepMind’s AI makes gigantic leap in solving protein structures. Nature, 588, 203–204. 10.1038/d41586-020-03348-4

- LociOiling. (2018). Alpha carbon. https://foldit.fandom.com/wiki/Alpha_carbon

- Abramson, J., Adler, J., Dunger, J., Evans, R., Green, T., Pritzel, A., Ronneberger, O., Willmore, L., Ballard, A. J., Bambrick, J., Bodenstein, S. W., Evans, D. A., Hung, C.-C., O’Neill, M., Reiman, D., Tunyasuvunakool, K., Wu, Z., Žemgulytė, A., Arvaniti, E., … Jumper, J. M. (2024). Accurate structure prediction of biomolecular interactions with AlphaFold 3. Nature. 10.1038/s41586-024-07487-w

- Jumper, J., Evans, R., Pritzel, A., Green, T., Figurnov, M., Ronneberger, O., Tunyasuvunakool, K., Bates, R., Žídek, A., Potapenko, A., Bridgland, A., Meyer, C., Kohl, S. A. A., Ballard, A. J., Cowie, A., Romera-Paredes, B., Nikolov, S., Jain, R., Adler, J., … Hassabis, D. (2021). Highly accurate protein structure prediction with AlphaFold. Nature, 596, 583–589. 10.1038/s41586-021-03819-2

- Hassabis, D. (2022). AlphaFold reveals the structure of the protein universe. https://deepmind.google/discover/blog/alphafold-reveals-the-structure-of-the-protein-universe/

- AlphaFold DB. (2022). Auxin response factor 16. https://alphafold.ebi.ac.uk/entry/A3B9A0